Singularity and Apptainer Containers

On CINECA’s HPC clusters, the available containerization platform is either Singularity or Apptainer.

Both containerization platforms are specifically designed to run scientific applications on HPC resources, giving users full control over their environment. Singularity and Apptainer containers can be used to package entire scientific workflows, software, libraries and data. This means that you don’t have to ask your cluster admin to install anything for you - you can put it in a Singularity or Apptainer container and run. The official Singularity documentation for its latest release is available here, while the official Apptainer documentation for its latest release is available here.

Differences between Singularity and Apptainer

This section provides basic information about the history of the Singularity project, to help users with no prior experience of Singularity or Apptainer understand the differences between the two platforms.

The Singularity project began as an open-source project in 2015, started by a team of researchers at Lawrence Berkeley National Laboratory led by Gregory Kurtzer.

In February 2018, the original leader of the Singularity project founded the Sylabs company to provide commercial support for Singularity.

In May 2020, Gregory Kurtzer left Sylabs but retained leadership of the Singularity open-source project: this event caused a major fork inside the Singularity project.

In May 2021, Sylabs forked the project and named the fork SingularityCE, while on November 30, 2021, the move of the Singularity open-source project into the Linux Foundation was announced and the Apptainer project was born.

Currently, there are three products derived from the original Singularity project from 2015:

Singularity: the commercial software supported by Sylabs.

SingularityCE: the open-source, community edition software also supported by Sylabs.

Apptainer: the fully open-source Singularity port under the Linux Foundation.

From a user perspective, quoting the announcement of the beginning of the Apptainer project: “Apptainer IS Singularity”.

The Apptainer project makes no changes at the image format level. This means that the default metadata within Singularity Image Format (SIF) images and their filesystems retain the Singularity name, ensuring that containers built with Apptainer continue to work with Singularity installations.

Moreover, Apptainer provides singularity as a command-line link and maintains as much of the Singularity CLI and environment functionality as possible.

Important

For this reason, all command examples in the rest of this documentation use the singularity command.

How to build a Singularity or Apptainer container image on your local machine

This section explains how to build container images with Singularity or Apptainer on your local machine.

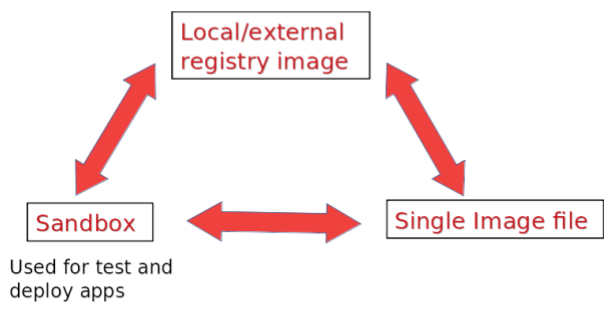

A Singularity container image can be built in different ways. The simplest build command is:

$ sudo singularity build [local options...] <IMAGE PATH> <BUILD SPEC>

The build command can produce containers in two different output formats:

default: a compressed, read-only Singularity Image Format (SIF) file, suitable for production. This is an immutable object.

sandbox: a writable (ch)root directory called sandbox, used for interactive development. To create this kind of output, pass the --sandbox build option.

The build spec target defines the method that build uses to create the container. All the methods are listed in the table:

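| Build method | Commands |
|---|---|
| Beginning with library:// to build from the Container Library | sudo singularity build <IMAGE PATH> library://<entity>/<collection>/<container>[:tag] |
| Beginning with docker:// to build from Docker Hub | sudo singularity build <IMAGE PATH> docker://<image>[:tag] |
| Path to an existing container on your local machine | sudo singularity build <IMAGE PATH> <existing_container>.sif |
| Path to a directory to build from a sandbox | sudo singularity build <IMAGE PATH> <sandbox_directory>/ |
| Path to a SingularityCE definition file | sudo singularity build <IMAGE PATH> <definition_file>.def |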

Since build accepts an existing container as a target and can create a container in either of these two formats, you can convert an existing .sif container image into a sandbox and vice versa.
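The conversion in both directions can be sketched as a small shell function that picks the direction from the type of the source: a directory is treated as a sandbox to be packed into a SIF file, anything else as a SIF file to be unpacked into a sandbox. This is a minimal sketch, not a Singularity or CINECA tool: the names convert_image and run, and the DRY_RUN=1 switch that only prints the commands, are hypothetical conveniences.

```shell
#!/bin/bash
# Hypothetical helper: convert a SIF image to a sandbox or vice versa.
# With DRY_RUN=1 the commands are printed instead of executed
# (a convention of this sketch, not a Singularity feature).

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# convert_image <source> <destination>
convert_image() {
  local src=$1 dst=$2
  if [ -d "$src" ]; then
    # Source is a sandbox directory: pack it into an immutable SIF file.
    run sudo singularity build "$dst" "$src"
  else
    # Source is a SIF file: unpack it into a writable sandbox directory.
    run sudo singularity build --sandbox "$dst" "$src"
  fi
}
```

For example, DRY_RUN=1 convert_image my_image.sif my_sandbox prints the command that unpacks my_image.sif into my_sandbox; drop DRY_RUN=1 to actually run it.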

Experienced Docker users may own Docker images that are not available on any container registry (e.g. custom container images): these users will benefit from the possibility of converting Docker images into Singularity image files.

Convert Docker container images into Singularity image files

Important

Before following this procedure, ensure that Docker and either Singularity or Apptainer are installed on your local system: the installation instructions can be found in the Docker official documentation and in the Singularity official admin guide or the Apptainer official admin guide, respectively.

Verify that the image exists by looking at the output of the docker command-line utility:

sudo docker image ls

Take note of the ID of the desired image.

Generate a .tar archive from the desired image using the following command:

sudo docker image save -o <image-name>.tar <image-ID>

Build a Singularity image file starting from the freshly generated archive with:

sudo singularity build <image-name>.sif docker-archive://<image-name>.tar

At the end of a successful build, remove the Docker image archive if it is not needed for other purposes.

Change the ownership of the Singularity image file so that it can be moved to a remote host without any permission-related issues:

sudo chown $(id -nu):$(id -ng) <image-name>.sif
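The steps above can be chained in a single shell function. This is a minimal sketch: docker_to_sif, run and the DRY_RUN=1 switch (print instead of execute) are hypothetical names for illustration, and it assumes the docker-archive:// source prefix, which tells Singularity that the input is an archive produced by docker image save.

```shell
#!/bin/bash
# Hypothetical helper chaining the Docker-to-SIF conversion steps.
# DRY_RUN=1 prints the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# docker_to_sif <image-ID> <image-name>
docker_to_sif() {
  local id=$1 name=$2
  # 1. Export the Docker image to a tar archive.
  run sudo docker image save -o "${name}.tar" "$id"
  # 2. Build the SIF file from the archive.
  run sudo singularity build "${name}.sif" "docker-archive://${name}.tar"
  # 3. Remove the archive (skip this step if you still need it).
  run sudo rm -f "${name}.tar"
  # 4. Give the SIF file back to the current user.
  run sudo chown "$(id -nu):$(id -ng)" "${name}.sif"
}
```

Running DRY_RUN=1 docker_to_sif <image-ID> <image-name> first is a cheap way to review the four commands before executing them.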

For further information on how to move files between local systems and CINECA’s clusters, visit the Data Transfer section.

Understanding Singularity or Apptainer definition files for building container images

The Definition File (or “def file” for short) is like a set of blueprints explaining how to build a custom container image, including specifics about the base operating system to build or the base container to start from, as well as software to install, environment variables to set at runtime, files to add from the host system, and container metadata.

A definition file is divided into two parts:

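| Parts | Purpose |
|---|---|
| Header | Describes the core operating system or base container to build from (e.g. the Bootstrap and From keywords). |
| Sections | The rest of the definition file. Each section is defined by a % character followed by the name of the particular section. All sections are optional, and a def file may contain more than one instance of a given section. |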

A definition file may look like this:

```
# This is a comment

# -- HEADER begin --
Bootstrap: docker
From: ubuntu:{{ VERSION }}
Stage: build
# -- HEADER end --

# -- SECTIONS begin --
%arguments
    VERSION=22.04

%setup
    touch /file1
    touch ${APPTAINER_ROOTFS}/file2

%files
    /file1
    /file1 /opt

%environment
    export LISTEN_PORT=54321
    export LC_ALL=C

%post
    apt-get update && apt-get install -y netcat
    NOW=`date`
    echo "export NOW=\"${NOW}\"" >> $APPTAINER_ENVIRONMENT

%runscript
    echo "Container was created $NOW"
    echo "Arguments received: $*"
    exec echo "$@"

%startscript
    nc -lp $LISTEN_PORT
# -- SECTIONS end --
```

For further information on how to write a custom definition file, users are strongly encouraged to visit the dedicated page in either the official Singularity user guide or the official Apptainer user guide.

The next section gives a quick outline of Spack, a package management tool compatible with Singularity that can be used to deploy entire software stacks inside a container image.

Advanced Singularity or Apptainer container build with Spack

Spack (full documentation here) is a package manager for Linux and macOS, able to download and compile (almost!) automatically the software stack needed for a specific application. It is compatible with the main container platforms (Docker, Singularity), meaning that it can be installed inside a container and in turn be used to deploy the necessary software stack inside the container image. This can be extremely useful in an HPC cluster environment, both to install applications as root (inside the container) and to keep a plethora of readily available software stacks (or even applications built with different software stack versions) living in different containers, regardless of the outside environment.

Getting Spack is a quick three-step process:

- Install the necessary dependencies, e.g. on Debian/Ubuntu:

apt update && apt install -y build-essential ca-certificates coreutils curl environment-modules gfortran git gpg lsb-release python3 python3-distutils python3-venv unzip zip

- Clone the repository:

git clone -c feature.manyFiles=true https://github.com/spack/spack.git /spack

- Activate Spack, e.g. for bash/zsh/sh:

. /spack/share/spack/setup-env.sh

The very same operations can be put in the %post section of a Singularity definition file to have a working installation of Spack once the build completes. Alternatively, one can bootstrap from an image containing Spack only and start the build of the container from there. For example:

sudo singularity build --sandbox <container_img> docker://spack/ubuntu-jammy

Spack Basic Usage

For example, the following command installs OpenMPI 4.1.5 with PMI support, the UCX, PSM2 and Verbs fabrics and Slurm scheduler integration, compiled with GCC 8.5.0:

$ spack install openmpi@4.1.5+pmi fabrics=ucx,psm2,verbs schedulers=slurm %gcc@8.5.0

Generally speaking, the deployment of a software stack installed via spack is based on the following steps:

Build a container image.

Get spack in your container.

Install the software stack you need.

In practice, with a view to building an immutable SIF container image for compiling and running an application, one can proceed as follows:

- Get a sandbox container image holding an installation of Spack and open a shell in it with sudo and write privileges (sudo singularity shell --writable <my_sandbox>).
- Write a spack.yaml file for a Spack environment listing all the packages and compilers your application needs (more details here).
- Execute spack concretize and spack install. If the installation goes through and your application compiles and runs, you are set to go: either convert your sandbox into a .sif file, fixing the changes into a container image, or, for a clean build, copy the spack.yaml file into the container in the %files section of a definition file, then activate Spack and execute spack concretize and spack install in the %post section.
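A spack.yaml for an anonymous Spack environment can be written straight from the shell inside the sandbox. This is a minimal sketch: the function name write_spack_yaml and the package list (an OpenMPI spec modeled on the spack install example above, plus cmake) are hypothetical and must be adapted to your application.

```shell
#!/bin/bash
# Hypothetical helper: write a minimal spack.yaml into <dir>.
# The specs below are illustrative only; list what your application needs.

write_spack_yaml() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/spack.yaml" <<'EOF'
spack:
  specs:
  - openmpi@4.1.5 +pmi fabrics=ucx schedulers=slurm
  - cmake
  concretizer:
    unify: true
  view: true
EOF
}
```

Inside the sandbox shell, write_spack_yaml /spacking followed by spack env activate -d /spacking, spack concretize and spack install reproduces the workflow described above.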

The following is a minimal example of a Singularity definition file: we bootstrap from a Docker container holding a clean installation of Ubuntu 22.04, copy a ready-made spack.yaml file into the container, get Spack therein and use it to install the software stack described in the spack.yaml file.

```
Bootstrap: docker
From: ubuntu:22.04

%files
    /some/example/spack/file/spack.yaml /spacking/spack.yaml

%post
    ### We install and activate Spack
    export DEBIAN_FRONTEND=noninteractive
    apt-get update
    apt-get install -y build-essential ca-certificates coreutils curl environment-modules gfortran git gpg lsb-release python3 python3-distutils python3-venv unzip zip
    git clone -c feature.manyFiles=true https://github.com/spack/spack.git /spack
    # %post runs under /bin/sh, so use "." instead of the bash-only "source"
    . /spack/share/spack/setup-env.sh

    ### We deploy the software stack described in spack.yaml in a Spack environment
    spack env activate -d /spacking/
    spack concretize
    spack install

%environment
    ### Custom environment variables should be set here
    export VARIABLE=MEATBALLVALUE
```

Bindings

A Singularity container image provides a standalone environment for software handling. However, it might still need files from the host system, as well as write privileges at runtime. As pointed out above, this last operation is indeed available when working with a sandbox, but it is not for an (immutable) SIF object. To provide for these needs, Singularity grants the possibility to mount files and directories from the host to the container.

- In the default configuration, the directories $HOME, /tmp, /proc, /sys, /dev and $PWD are among the system-defined bind paths.
- The SINGULARITY_BIND environment variable can be set (on the host) to specify the bindings. Its value is a comma-delimited string of bind path specifications in the format src[:dest[:opts]], where src and dest are paths outside and inside of the container respectively; the dest argument is optional and takes the same value as src if not specified. For example: $ export SINGULARITY_BIND=/path/in/host:/mount/point/in/container.
- Bindings can be specified on the command line when a container instance is started via the --bind option. The structure is the same as above, e.g. singularity shell --bind /path/in/host:/mount/point/in/container <container_img>.
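To make the src[:dest[:opts]] rule concrete, the sketch below prints how each entry of a comma-delimited bind list would be read: dest falls back to src, and opts is assumed to fall back to rw (read-write), with ro as the read-only alternative. describe_binds is a hypothetical helper for illustration only; Singularity does its own parsing.

```shell
#!/bin/bash
# Hypothetical helper: explain a SINGULARITY_BIND / --bind value.
# Assumes opts defaults to "rw" when omitted.

describe_binds() {
  local spec src dest opts
  local IFS=','
  for spec in $1; do
    # Split each entry on ':' into source, destination and options.
    IFS=':' read -r src dest opts <<< "$spec"
    echo "${src} -> ${dest:-$src} (${opts:-rw})"
  done
}
```

For instance, describe_binds "/scratch/data:/data:ro,/opt/tools" shows that the first path is mounted read-only on /data, while /opt/tools keeps its own path inside the container.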

Environment variables

Environment variables inside the container can be set in several ways, see also here. At build time they should be specified in the %environment section of a Singularity definition file. Most of the variables from the host are passed to the container, except for PS1, PATH and LD_LIBRARY_PATH, which are modified to contain default values; to prevent host variables from being passed to the container, use the --cleanenv option to start a container instance with a clean environment. Further environment variables can be set, and host variables can be overridden, at runtime in several ways:

| Scope | CLI flag / host-side variable |
|---|---|
| Directly pass an environment variable to the containerized application | --env MYVARIABLE=myvalue |
| Directly pass a list of environment variables held in a file to the containerized application | --env-file <file> |
| Automatically pass a host-side defined variable to the containerized application | export SINGULARITYENV_MYVARIABLE=myvalue on the host machine results in MYVARIABLE=myvalue inside the container |

With respect to special PATH variables:

| Scope | Host-side variable |
|---|---|
| Append to the PATH | export SINGULARITYENV_APPEND_PATH=</path/to/append/> |
| Prepend to the PATH | export SINGULARITYENV_PREPEND_PATH=</path/to/prepend/> |
| Override the LD_LIBRARY_PATH | export SINGULARITYENV_LD_LIBRARY_PATH=</path/to/libs/>. NOTE: by default, inside the container LD_LIBRARY_PATH is set to /.singularity.d/libs. Users are strongly encouraged to also include this path when setting SINGULARITYENV_LD_LIBRARY_PATH. |
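When overriding LD_LIBRARY_PATH, a small helper can make sure the default container library directory is kept. This is a minimal sketch assuming that directory is /.singularity.d/libs (where Singularity places bound host libraries, e.g. with --nv); with_container_libs is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: return a library path that always contains
# /.singularity.d/libs, appending it when missing.

with_container_libs() {
  local libs=/.singularity.d/libs
  case ":$1:" in
    *":$libs:"*) echo "$1" ;;      # already present: keep the value as is
    ::) echo "$libs" ;;            # empty input: use the default only
    *) echo "$1:$libs" ;;          # otherwise append the default directory
  esac
}
```

Typical use on the host: export SINGULARITYENV_LD_LIBRARY_PATH=$(with_container_libs /opt/mylib/lib), where /opt/mylib/lib is a placeholder for a directory inside the container.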

Finally, two additional variables can be set on the host to manage the build process:

| Scope | Host-side variable |
|---|---|
| Pointing to a directory used for caching data from the build process | SINGULARITY_CACHEDIR |
| Pointing to a directory used for the temporary build of the squashfs system | SINGULARITY_TMPDIR |
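Both directories can grow large, so it is common to point them to a roomy filesystem before building or pulling images. This is a minimal sketch: setup_singularity_dirs is a hypothetical name, and the fallback chain (an explicit argument, then $CINECA_SCRATCH, then /tmp) is an assumption to adapt to your system.

```shell
#!/bin/bash
# Hypothetical helper: export SINGULARITY_CACHEDIR and SINGULARITY_TMPDIR
# under a base directory and create them.
# Base directory: first argument, else $CINECA_SCRATCH, else /tmp (assumption).

setup_singularity_dirs() {
  local base=${1:-${CINECA_SCRATCH:-/tmp}}
  export SINGULARITY_CACHEDIR="$base/singularity/cache"
  export SINGULARITY_TMPDIR="$base/singularity/tmp"
  mkdir -p "$SINGULARITY_CACHEDIR" "$SINGULARITY_TMPDIR"
}
```

Call it in the same shell (not in a subshell) before running singularity build or singularity pull, so that the exported variables are visible to those commands.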

Note

All the aforementioned variables containing the word SINGULARITY are interpreted and correctly applied by Apptainer.

However, Apptainer may print warnings when these variables are used instead of their Apptainer-specific counterparts: to avoid this, simply replace SINGULARITY with APPTAINER in the variable name.
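The renaming can be automated. The sketch below, a hypothetical helper named mirror_to_apptainer, exports every variable whose name starts with SINGULARITY under the name obtained by replacing SINGULARITY with APPTAINER (e.g. SINGULARITY_BIND becomes APPTAINER_BIND and SINGULARITYENV_FOO becomes APPTAINERENV_FOO). It relies on bash-specific expansions.

```shell
#!/bin/bash
# Hypothetical helper: copy SINGULARITY* variables to APPTAINER* names.

mirror_to_apptainer() {
  local name new
  # "${!SINGULARITY@}" expands to the names of all variables starting
  # with SINGULARITY.
  for name in "${!SINGULARITY@}"; do
    new=${name/SINGULARITY/APPTAINER}
    # ${!name} is the value of the variable whose name is stored in $name.
    export "$new=${!name}"
  done
}
```

The original SINGULARITY* variables are left untouched; unset them afterwards if you want Apptainer to see only the new names.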

Containers in HPC environment

This section reports the information and the container platform flags needed to run Singularity or Apptainer containers on CINECA’s clusters.

In order to move locally built SIF images on CINECA’s clusters, consult the “Data Transfer” page under the File Systems and Data Management section.

Alternatively, Singularity can pull existing container images directly from container registries, such as Docker Hub or the Container Library. On CINECA’s clusters this is done with the following command syntax:

singularity pull registry://path/to/container_img[:tag]

This will create a SIF file in the directory where the command was run allowing the user to run the image just pulled.
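By default the pulled file is named after the image and its tag (for example, pulling docker://ubuntu:22.04 produces ubuntu_22.04.sif, and a missing tag means latest). The sketch below reproduces this naming for plain name[:tag] references so that a job script can predict the file name; default_sif_name is a hypothetical helper, and references with digests (@sha256:...) are not handled.

```shell
#!/bin/bash
# Hypothetical helper: predict the default SIF name of "singularity pull"
# for references of the form transport://[registry/][path/]name[:tag].

default_sif_name() {
  local ref=${1#*://}      # drop the transport prefix, e.g. docker://
  local last=${ref##*/}    # keep the last path component: name[:tag]
  local name=${last%%:*}
  local tag=latest
  case $last in *:*) tag=${last#*:} ;; esac
  echo "${name}_${tag}.sif"
}
```

To avoid depending on the default, the output file can also be given explicitly, e.g. singularity pull my_image.sif docker://ubuntu:22.04.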

The MPI implementation used in the CINECA clusters is OpenMPI (as opposed to MPICH). Singularity offers the possibility to run parallel applications compiled and installed in a container using the host MPI installation, as well as the bare-metal capabilities of the host such as the InfiniBand interconnect. This is the so-called Singularity hybrid approach, where the OpenMPI installed in the container and the one on the host work in tandem to instantiate and run the job; see also the documentation.

Note

Keep in mind that when exploiting the Singularity hybrid approach, the necessary MPI libraries from the host are automatically bound above the ones present in the container.

The only caveat is that the two installations (container and host) of OpenMPI have to be compatible to a certain degree. The (default) installation specifics for each cluster are listed below:

| Cluster | OpenMPI version | PMI implementation | Specifics | Tweaks |
|---|---|---|---|---|
| Galileo100 | 4.1.1 | pmi2 | --with-pmi --with-ucx --with-slurm | |
| Leonardo | 4.1.6 | pmix_v3 | --with-ucx --with-slurm --with-cuda * | |
| Pitagora | 4.1.6 | pmix_v3 | --with-ucx --with-slurm --with-cuda * | export PMIX_MCA_gds=hash ** |

* only available in boost_* partitions.

** suppresses the PMIX WARNING when using srun.

Note

Even if the host and container hold different versions of OpenMPI, the application might still run in parallel, but at a reduced speed, as it might not be able to exploit the full capabilities of the host bare metal installation.
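Before launching, it can help to compare the OpenMPI version in the container with the host one. This is a minimal sketch: the helper names are hypothetical, the parser assumes the usual "mpirun (Open MPI) X.Y.Z" banner of mpirun --version, and treating a matching major.minor version as compatible is only a first sanity check, not an official rule.

```shell
#!/bin/bash
# Hypothetical helpers to compare host and container OpenMPI versions.

# Read "mpirun --version" output on stdin and print the version, e.g. 4.1.6.
ompi_version() {
  sed -n 's/.*(Open MPI) \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# same_major_minor <v1> <v2>: succeed if the first two components match.
same_major_minor() {
  [ "$(echo "$1" | cut -d. -f1-2)" = "$(echo "$2" | cut -d. -f1-2)" ]
}

# Example usage (after loading the cluster MPI module):
# host=$(mpirun --version | ompi_version)
# cont=$(singularity exec <container_img> mpirun --version | ompi_version)
# same_major_minor "$host" "$cont" || echo "warning: host $host vs container $cont"
```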

A suite of container images holding OpenMPI versions compatible with the CINECA clusters is available in the NVIDIA catalog, discussed in the next section.

To run GPU applications on accelerated clusters, one first has to check that the container image holds a compatible version of CUDA. The specifics of each cluster are listed in the following table:

| Cluster | Driver Version | CUDA Version | GPU Model |
|---|---|---|---|
| Galileo100 | 470.42.01 | 11.4 | NVIDIA V100 PCIe3 32 GB |
| Leonardo | 535.54.03 | 12.2 | NVIDIA A100 SXM6 64 GB HBM2 |
| Pitagora | 565.57.01 | 12.7 | NVIDIA H100 SXM 80GB HBM2e |

The minimum driver version required by each CUDA release series is:

| CUDA Version | Required driver version |
|---|---|
| CUDA 12.x | from 525.60.13 |
| CUDA 11.x | from 450.80.02 |
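The check against the table above can be scripted. This is a minimal sketch covering only the two CUDA series in the table; the helper names are hypothetical, and the driver version on a GPU node can be read with nvidia-smi, which ships with the NVIDIA driver.

```shell
#!/bin/bash
# Hypothetical helpers: does a driver meet the minimum for a CUDA version?

# Print the minimum driver for the CUDA major version of $1 (11.x or 12.x).
min_driver_for_cuda() {
  case ${1%%.*} in
    12) echo 525.60.13 ;;
    11) echo 450.80.02 ;;
    *) return 1 ;;
  esac
}

# driver_supports_cuda <driver_version> <cuda_version>
driver_supports_cuda() {
  local min
  min=$(min_driver_for_cuda "$2") || return 1
  # sort -V orders version strings: the minimum must come first (or be equal).
  [ "$(printf '%s\n%s\n' "$min" "$1" | sort -V | head -n 1)" = "$min" ]
}

# Example on a GPU node:
# drv=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)
# driver_supports_cuda "$drv" 12.2 && echo "driver is recent enough for CUDA 12.2"
```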

One can certainly install a working version of CUDA on their own, for example via Spack. However, a simple and effective way to obtain a container image provided with a CUDA installation is to bootstrap from an NVIDIA HPC SDK docker container, which already comes equipped with CUDA, OpenMPI and the NVHPC compilers. Such containers are available in the NVIDIA catalog. Their tag follows a simple structure, $NVHPC_VERSION-$BUILD_TYPE-cuda$CUDA_VERSION-$OS, where:

- $BUILD_TYPE: can take the value devel or runtime. The devel images are heavier and are used to compile and install applications; the runtime images are lightweight containers for deployment, stripped of all the compilers and applications not needed at runtime.
- $CUDA_VERSION: can either take a specific value (e.g. 11.8) or be _multi. The multi flavors hold up to three different CUDA versions and are therefore much heavier. However, they can be useful to deploy the same base container on HPC systems with different CUDA specifics, or to try out the performance of the various versions.
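The tag structure can be composed programmatically, for instance in a script that pulls the image matching a cluster's CUDA version. This is a minimal sketch: nvhpc_tag is a hypothetical helper, and it assumes the multi flavor appears as cuda_multi in the tag, as in the definition file below.

```shell
#!/bin/bash
# Hypothetical helper: build an NVIDIA HPC SDK image tag following
# $NVHPC_VERSION-$BUILD_TYPE-cuda$CUDA_VERSION-$OS.

nvhpc_tag() {
  local nvhpc=$1 build=$2 cuda=$3 os=$4
  case $build in
    devel|runtime) ;;
    *) echo "build type must be devel or runtime" >&2; return 1 ;;
  esac
  # The multi flavor is written as "cuda_multi" in the tag.
  [ "$cuda" = "multi" ] && cuda=_multi
  echo "${nvhpc}-${build}-cuda${cuda}-${os}"
}
```

For example: singularity pull docker://nvcr.io/nvidia/nvhpc:$(nvhpc_tag 23.1 runtime 11.8 ubuntu22.04).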

In the following we provide a minimal Singularity definition file following the above principles, namely: bootstrap from a devel NVIDIA HPC SDK container, install the needed applications, copy the necessary binaries and files for runtime, and pass them to a lightweight container. This technique is called multi-stage build; more information is available here.

```
Bootstrap: docker
From: nvcr.io/nvidia/nvhpc:23.1-devel-cuda_multi-ubuntu22.04
Stage: build

%files
    ### Notice the asterisk when copying directories
    /directory/with/needed/files/in/host/* /destination/directory/in/container
    /our/application/CMakeLists.txt /opt/app/CMakeLists.txt
    /some/example/spack/file/spack.yaml /spacking/spack.yaml

%post
    ### We install and activate Spack
    apt-get update
    apt-get install -y build-essential ca-certificates coreutils curl environment-modules gfortran git gpg lsb-release python3 python3-distutils python3-venv unzip zip
    git clone -c feature.manyFiles=true https://github.com/spack/spack.git /spack
    . /spack/share/spack/setup-env.sh

    ### We deploy the software stack described in spack.yaml in a Spack environment
    spack env activate -d /spacking/
    spack concretize
    spack install

    ### Make and install our application
    cd /opt/app && mkdir build
    cd build
    cmake -DCMAKE_INSTALL_PREFIX=/opt/app_binaries ..
    make -j
    make install

###########################################################################################
### We now only need to copy the necessary binaries and libraries for runtime execution ###
###########################################################################################

Bootstrap: docker
From: nvcr.io/nvidia/nvhpc:23.1-runtime-cuda11.8-ubuntu22.04
Stage: runtime

%files from build
    /spacking/* /spacking/
    /opt/app_binaries/* /opt/app_binaries/
```

Execute containerized application in an HPC environment

As explained in the previous section as well as in the documentation, if the MPI library installed in the container is compatible with that of the host system, Singularity itself takes care of binding the necessary libraries so that a parallel containerized application can run exploiting the cluster infrastructure. In practical terms, this means that one just needs to launch it as:

mpirun -np $nprocs singularity exec <container_img> <container_cmd>

In comparison, the following code snippet launches the application using the MPI inside the container, thus effectively running on a single node:

singularity exec <container_img> mpirun -np $nprocs <container_cmd>

Regarding containerized applications that need GPU support, Singularity is again capable of binding the necessary libraries on its own, provided that compatible software versions have been deployed in the container and on the host; full documentation is available here.

To achieve this, one just needs to add the --nv or the --nvccli flag on the command line, namely:

mpirun -np $nprocs singularity exec --nv <container_img> <container_cmd>

Important

In recent versions of both Singularity and Apptainer, the --nvccli flag is also available for NVIDIA GPUs: it delegates the GPU setup to the nvidia-container-cli tool and can be used as an alternative to the --nv flag.

Note

Similarly to what was said about the Singularity hybrid approach for parallel MPI containers, for GPU parallel programs the necessary CUDA drivers and libraries from the host are automatically bound and used inside the container, provided that the --nv or --nvccli flag is used when starting a container instance.

e.g. $ singularity exec --nv <container_img> <container_cmd>.

Cluster specific tweaks

This section reports the specific version of Singularity or Apptainer installed on each CINECA cluster, along with some useful information to help users properly execute their containerized applications.

On Galileo100, Singularity 3.8.0 is available on the login nodes and on the partitions.

Beware that, on the Galileo100 cluster, GPU nodes are available both through the Interactive Computing service and by requesting the g100_usr_interactive Slurm partition, with one main difference:

| Platform | Maximum number of GPUs per job |
|---|---|
| Interactive Computing service | 2 |
| g100_usr_interactive Slurm partition | 1 |

The necessary MPI, Singularity and CUDA modules are the following:

module load profile/advanced   # profile with additional modules

module load autoload singularity/3.8.0--bind--openmpi--4.1.1

module load cuda/11.5.0

Note

The module load autoload singularity/3.8.0--bind--openmpi--4.1.1 command automatically loads the following modules:

- singularity/3.8.0--bind--openmpi--4.1.1
- zlib/1.2.11--gcc--10.2.0
- openmpi/4.1.1--gcc--10.2.0-cuda--11.1.0

The following code snippet is an example of a Slurm job script for running MPI parallel containerized applications on the Galileo100 cluster.

Notice that the --cpus-per-task option has been set to 48 to fully exploit the CPUs in the g100_usr_prod partition.

```bash
#!/bin/bash
#SBATCH --nodes=6
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=48
#SBATCH --mem=30GB
#SBATCH --time=00:10:00
#SBATCH --output=slurm.%j.out
#SBATCH --error=slurm.%j.err
#SBATCH --account=<Account_name>
#SBATCH --partition=g100_usr_prod

module purge
module load profile/advanced
module load autoload singularity/3.8.0--bind--openmpi--4.1.1
module load cuda/11.5.0

# 6 nodes x 1 task per node = 6 MPI processes
mpirun -np 6 singularity exec <container_img> <container_cmd>
```

Necessary modules and Slurm job script example

On Leonardo, Singularity PRO 4.3.0 is available on the login nodes and on the partitions. The necessary MPI, Singularity and CUDA modules are the following:

module load hpcx-mpi/2.19

module load cuda/12.2

The following code snippet is an example of a Slurm job script for running MPI parallel containerized applications on the Leonardo cluster with GPU support. In order to fully and evenly exploit the 32 cores and 4 GPUs of each boost_usr_prod node, one needs to set --ntasks-per-node=4, --cpus-per-task=8 and --gres=gpu:4.

As a redundant but necessary measure, we also set the number of threads to eight manually via export OMP_NUM_THREADS=8.

```bash
#!/bin/bash
#SBATCH --nodes=6
#SBATCH --ntasks-per-node=4
#SBATCH --cpus-per-task=8
#SBATCH --gres=gpu:4
#SBATCH --mem=30GB
#SBATCH --time=00:10:00
#SBATCH --output=slurm.%j.out
#SBATCH --error=slurm.%j.err
#SBATCH --account=<Account_name>
#SBATCH --partition=boost_usr_prod

export OMP_NUM_THREADS=8

module purge
module load hpcx-mpi/2.19
module load cuda/12.2

# 6 nodes x 4 tasks per node = 24 MPI processes
mpirun -np 24 singularity exec --nv <container_img> <container_cmd>
```

As explained above, provided that the container and host OpenMPI share a compatible PMI implementation, the application can also be launched via the srun command after allocating the necessary resources. For example:

```bash
salloc -t 03:00:00 --nodes=6 --ntasks-per-node=4 --ntasks=24 --gres=gpu:4 -p boost_usr_prod -A <Account_name>
# <load the necessary modules and/or export necessary variables>
export OMP_NUM_THREADS=8
srun --nodes=6 --ntasks-per-node=4 --ntasks=24 singularity exec --nv <container_img> <container_cmd>
```


On Pitagora, Apptainer 1.4.0 is available on the login nodes and on the partitions.